Agentic AI vs Generative AI: Exploring the Differences and Overlaps

Agentic AI vs Generative AI is a trending topic, and many teams want to understand how the two differ, where they overlap, and how they can be combined for end-to-end process automation. Organizations are already incorporating Generative AI into daily work and encouraging employees to use it. The next step is a smarter class of AI that has the capabilities of GenAI and goes beyond them: Agentic AI, which marks a new era of automation.

According to Gartner, 33% of enterprise software applications will include Agentic AI by 2028, up from less than 1% in 2024.

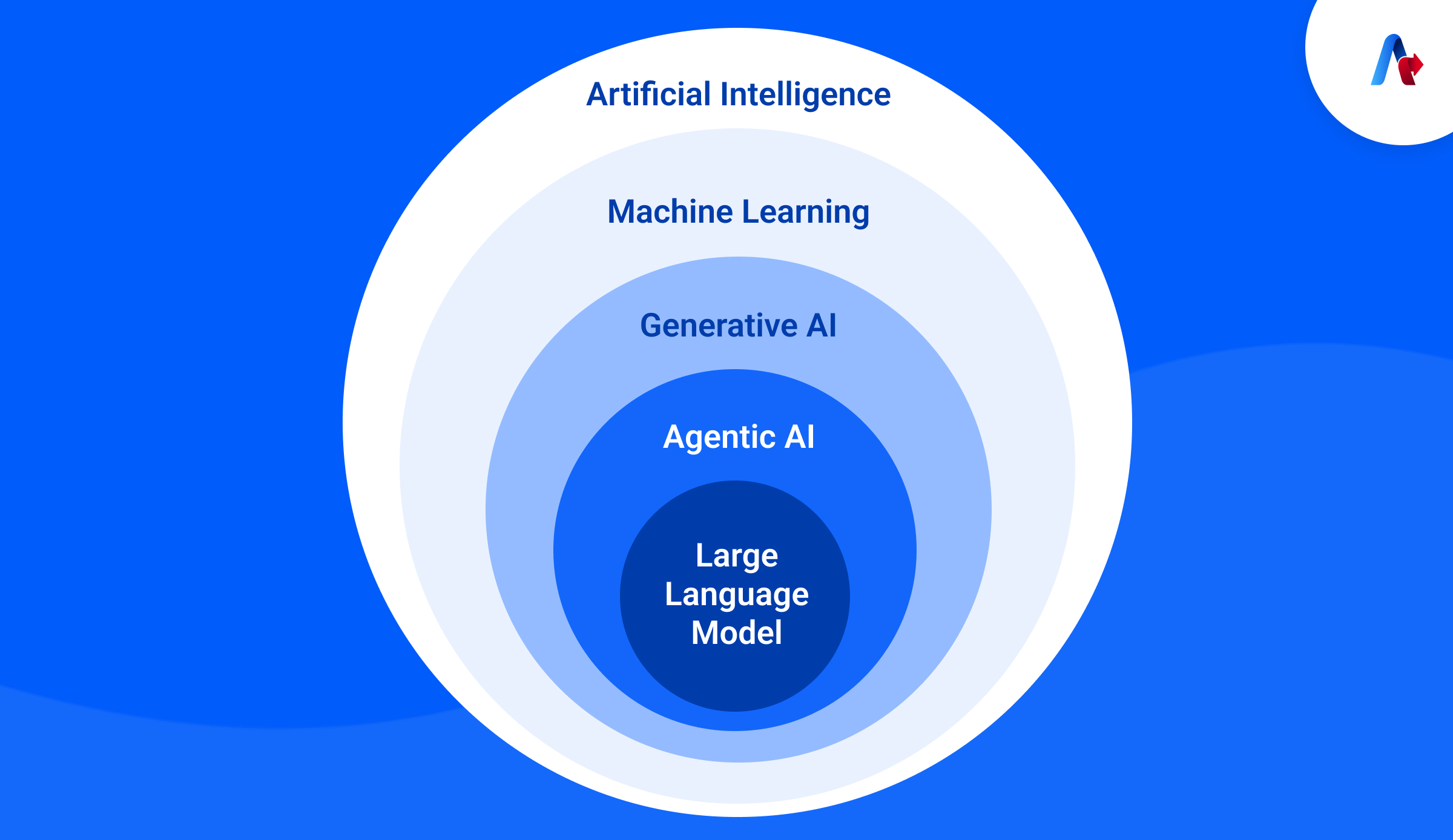

In this blog, we will learn how AI Agents differ from Generative AI, where the two overlap, and what role Large Language Models (LLMs) play in both. Let’s begin by understanding what these technologies are and their key features.

What is Generative AI?

Generative AI is a subset of artificial intelligence capable of creating new content, such as text, audio, images, music, and code. It uses algorithms to identify patterns, understand grammar, context, and style, and generate meaningful outputs. Once it is trained on specific datasets, it can produce new data within the same context.

Key Features

- Diversity in content: It has the capability to generate different types of media such as text, images, and music.

- Pattern Recognition: It can recognize patterns based on data it is trained on.

- Innovation: It can create new and creative content in the same context.

What is Agentic AI?

Agentic AI is AI that acts: it enables businesses to make decisions and carry them out autonomously. It can also learn from interactions, enabling the new automation era known as Agentic Automation. Generative AI is the key enabler for Agentic Automation.

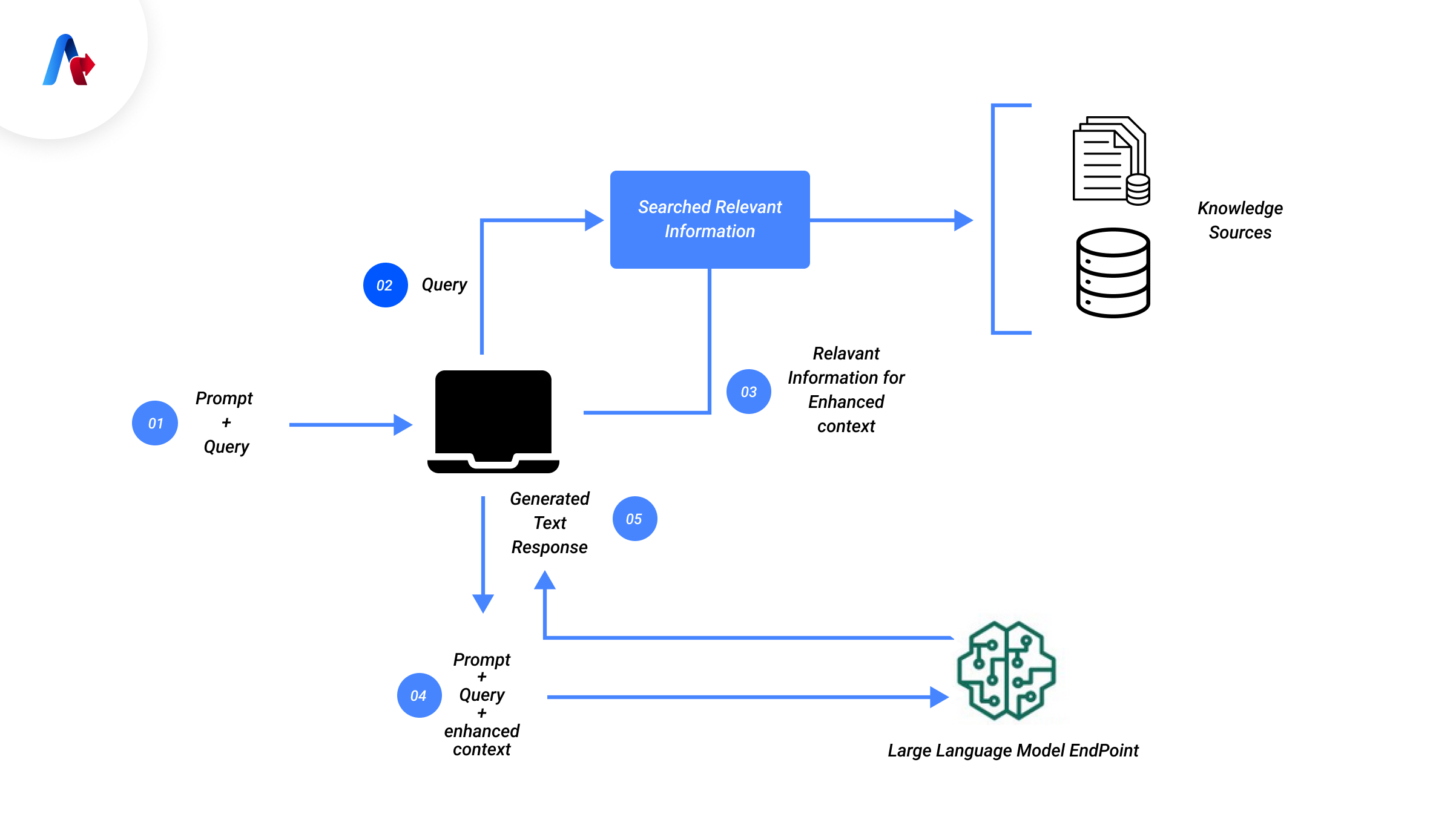

At the core of an agent is usually an LLM that first interprets the natural-language input and then devises a work plan for the goal that has been set for the agent. Agentic Automation would not be possible without Generative AI models.

An agent is an entity that can understand a request, decide what to do, and carry out complex tasks.
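The plan-then-act behavior described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: `plan_with_llm` is a hypothetical stub standing in for a real LLM call, and the goal, tool names, and `Agent` class are invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def plan_with_llm(goal: str) -> List[str]:
    # Hypothetical stand-in for an LLM planner. A real agent would send the
    # goal to a language model and parse the plan it returns.
    canned_plans = {"publish blog post": ["draft_text", "review_text", "publish"]}
    return canned_plans.get(goal, [])


@dataclass
class Agent:
    goal: str
    tools: Dict[str, Callable[[dict], None]]
    state: dict = field(default_factory=dict)
    log: List[str] = field(default_factory=list)

    def run(self) -> dict:
        for step in plan_with_llm(self.goal):      # 1. plan
            tool = self.tools.get(step)            # 2. decide which tool fits
            if tool is None:
                self.log.append(f"skipped unknown step: {step}")
                continue
            tool(self.state)                       # 3. act
            self.log.append(f"done: {step}")
        return self.state


# Hypothetical tools; each one reads and updates the shared state.
def draft_text(state: dict) -> None:
    state["text"] = "Agentic AI in one paragraph."


def review_text(state: dict) -> None:
    state["approved"] = len(state["text"]) > 0


def publish(state: dict) -> None:
    state["published"] = state.get("approved", False)


agent = Agent(
    goal="publish blog post",
    tools={"draft_text": draft_text, "review_text": review_text, "publish": publish},
)
result = agent.run()
print(result)
print(agent.log)
```

The loop keeps planning (the LLM's job) separate from acting (the tools' job), which is the division of labor the paragraph describes.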

Key Features

- Decision making: Agents can make decisions independently.

- Context Grounding: Agentic AI can interpret information based on the situation, the history of the user, and the underlying data provided to it. This data may be in the form of structured database tables or unstructured documents.

- Collaboration: Multiple agents can work together to complete a task or process.

- Goal-oriented: Each agent is designed for a specific goal. For example, a language detection agent detects the language of your input data and should not perform any other task.

Gen AI vs Agentic AI

Now that you know what Gen AI and Agentic AI are, let’s find out the key differences between these two technologies:

| Feature | GenAI | Agentic AI |
|---|---|---|
| What it does | Excels at creating new content such as text, audio, or images. | Is action-oriented: it goes beyond content creation, outlines a plan for the tasks to be performed, makes independent decisions, and acts on them. |
| Use | Can craft innovative ideas, narratives, and work plans. | Can analyze a situation, design strategies, and take action to achieve its goal. |
| How it works | Generates output that relies on human input and guidance. | Operates independently, adapts to changing environments, and learns from experience with minimal human interaction. |
| Focus | Creating. | Doing. |
| Output | New content, analysis of existing content. | A series of actions or decisions. |
| Example | Creating marketing content. | Publishing content on different marketing channels. |

Large Language Models: The Core of GenAI & Agentic AI

Large Language Models (LLMs) are a specialized type of Generative AI model designed to process and generate human-like text, building on techniques from Natural Language Processing (NLP).

LLMs are so popular that 67% of businesses use GenAI powered by LLMs for quick content creation.

These models work with text-based data, are trained on extensive datasets, and are well suited to tasks such as content creation, language translation, and responding to prompts. While LLMs are a part of Generative AI, they focus on text-related tasks.

Key Features

- Text Centric: Focused exclusively on understanding, generating, and analyzing text.

- Large training dataset: Trained on large datasets such as books and web content.

- Predictive: They can predict the next word in a sentence or generate meaningful responses based on input prompts.
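To make the "predict the next word" idea concrete, here is a toy sketch that counts which word most often follows another in a tiny, made-up corpus. It is only an illustration: real LLMs learn these probabilities with neural networks over vast datasets, not with simple counts, and the corpus and the `predict_next` helper are assumptions for this example.

```python
from collections import Counter, defaultdict

# Hypothetical miniature corpus, already split into words.
corpus = (
    "the king sat on the throne . "
    "the queen sat on the throne . "
    "the king spoke"
).split()

# Count how often each word follows each other word.
following = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    following[current][nxt] += 1


def predict_next(word):
    """Return the word seen most often after `word`, or None if unseen."""
    candidates = following.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


print(predict_next("sat"))
print(predict_next("on"))
```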

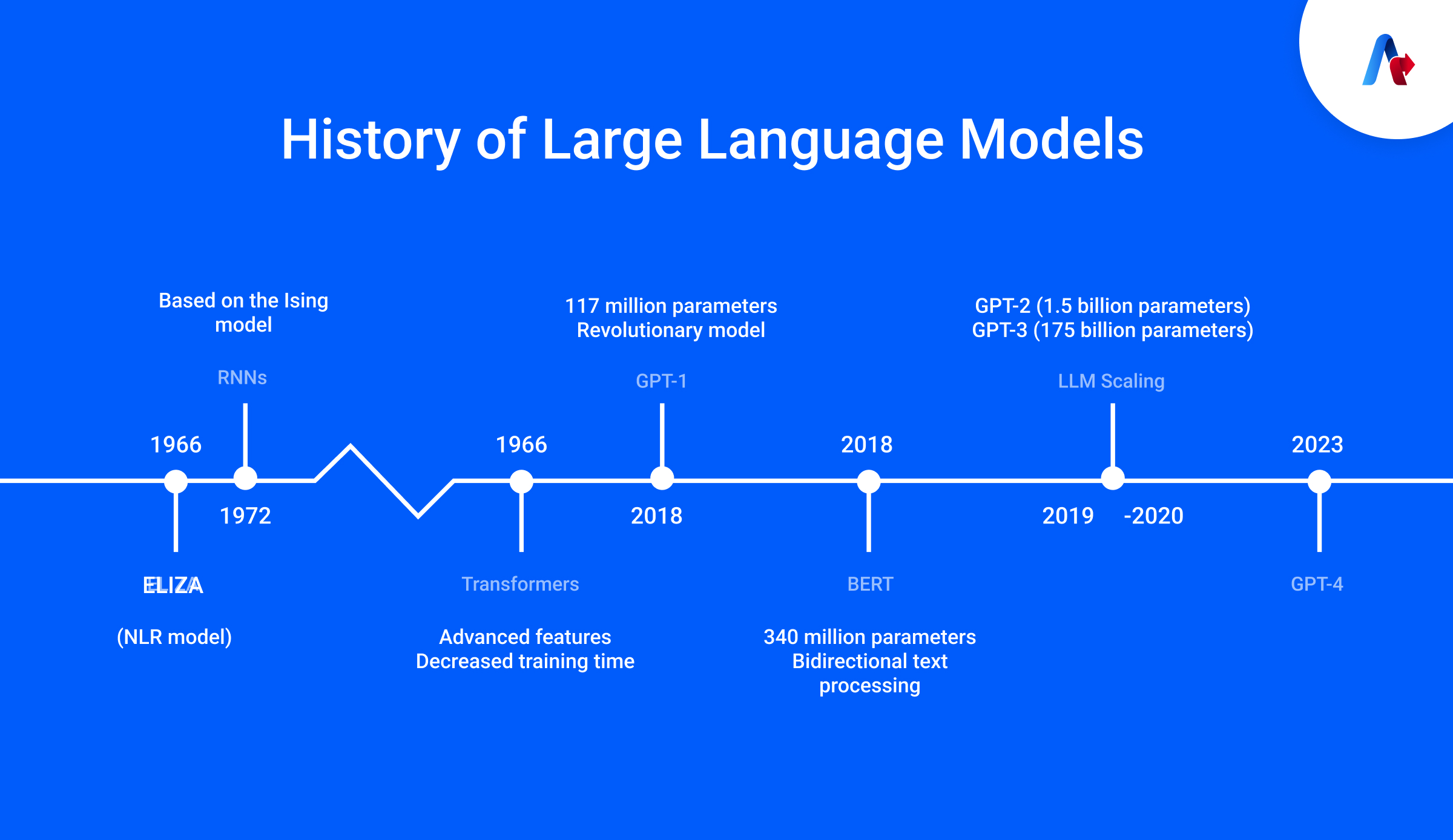

The Evolution of Large Language Models

The journey started in 1966 with ELIZA, an early NLP program designed to give preprogrammed responses based on keywords. Later, Recurrent Neural Networks (RNNs) made it possible to predict the next word in a sentence. However, performance was still far from what we have today.

In 2017, Google researchers introduced the Transformer architecture in the paper "Attention Is All You Need", which proved to be a breakthrough and laid the foundation for more advanced LLMs. In 2018, OpenAI released GPT-1, featuring 117 million parameters, which was a significant step forward.

BERT, also introduced in 2018, processes text in both directions, offering better context understanding than the unidirectional GPT-1.

In 2019, this evolution gained momentum with the release of GPT-2, which was more advanced and captured widespread attention. It started a wave of AI innovation.

In 2023, GPT-4 was launched, marking a significant milestone in the evolution of large language models.

How LLMs Work: Breaking Down AI-Powered Language Processing

LLMs are transforming the way machines understand and generate human language. Here is how they work:

1. Tokenization

Tokenization is the process of breaking text down into small units called tokens.

A token is a segment of text: a single word, or part of a word when the word is long.

For example, short words like "Hi" or "There" are treated as single tokens. However, a longer word such as "Summarization" may be split into three tokens, for example "Sum", "mar", and "ization".

Each AI model uses its own tokenization scheme, typically built from frequently occurring subword units such as prefixes and suffixes, to represent language and context.
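The splitting of a long word into subword tokens can be sketched with a greedy longest-match tokenizer. This is a simplified illustration, not the algorithm any particular model uses; the `VOCAB` set is a hypothetical toy vocabulary, whereas real tokenizers learn tens of thousands of entries from data.

```python
# Hypothetical toy vocabulary of known tokens.
VOCAB = {"Hi", "There", "Sum", "mar", "ization"}


def tokenize(word, vocab=VOCAB):
    """Split `word` into tokens by always taking the longest known piece."""
    tokens, start = [], 0
    while start < len(word):
        # Try the longest remaining piece first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # No vocabulary entry matches: fall back to a single character.
            tokens.append(word[start])
            start += 1
    return tokens


print(tokenize("Hi"))
print(tokenize("Summarization"))
```

With this vocabulary, a short word stays whole while the long word is broken into three pieces, matching the example in the text.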

2. Embedding

Tokens are converted into numerical vectors, each with a magnitude and a direction, so that computers can represent the meaning of each word and its relationships with other words. These vectors are held in the model's embedding table (applications that search over text often also keep embeddings in a vector database), and the distance and angle between two vectors indicate how closely related the words are.

These relationships help the model predict the next word. For example, the words Prince, Queen, and Throne are much closer to the word King than the word Apple is. Converting words into vectors allows the model to understand the relationships in the data provided to it.
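The King/Queen/Apple comparison can be sketched with cosine similarity, a common way to measure how closely two vectors point in the same direction. The three-dimensional vectors below are hand-picked assumptions chosen only to illustrate the idea; real embeddings are learned during training and have hundreds or thousands of dimensions.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-picked for illustration.
EMBEDDINGS = {
    "king":   [0.90, 0.80, 0.10],
    "queen":  [0.85, 0.90, 0.10],
    "prince": [0.80, 0.70, 0.20],
    "throne": [0.70, 0.60, 0.30],
    "apple":  [0.10, 0.05, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(word):
    """Return all other words, most similar to `word` first."""
    target = EMBEDDINGS[word]
    others = [w for w in EMBEDDINGS if w != word]
    return sorted(
        others,
        key=lambda w: cosine_similarity(target, EMBEDDINGS[w]),
        reverse=True,
    )


print(rank_by_similarity("king"))
```

Cosine similarity compares direction only and ignores magnitude; other distance measures, such as Euclidean distance, take both into account.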

3. Transformer

Transformers identify the context of a sentence using an attention mechanism. This mechanism evaluates how much each word, together with its position, contributes to the overall meaning of the sentence. It then produces an output matrix, which is processed and converted into natural language. The quality and relevance of the output depend on how the model was trained and on the quality of the training data.
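The attention mechanism can be sketched as scaled dot-product attention, the formulation from "Attention Is All You Need". The version below is a deliberately simplified sketch in plain Python: the two-dimensional token vectors are made up, the same vectors serve as queries, keys, and values, and the learned projection matrices and positional encodings of a real Transformer are left out.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score each key against this query, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional vectors for three tokens.
tokens = ["the", "king", "rules"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.9]]
outputs, weights = attention(vectors, vectors, vectors)

for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sentence. In this toy setup "king" attends more to "rules" than to "the" because their vectors point in similar directions.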

Common Challenges of Large Language Models

Though LLMs prove to be of great use for businesses across industries, they come with some common challenges.

1. Struggles with Logic and Reasoning

LLMs can struggle with tasks that demand multi-step logic and reasoning, and they may fail to produce a correct response when a problem requires it.

2. Bias in Information

LLMs are trained on massive datasets that can contain biases, so they can generate biased responses, which is especially problematic in sensitive applications.

3. Knowledge Limitation (Based on Training Data)

LLMs are limited to the knowledge in their training data, which ends at a cutoff date. They may therefore be unaware of recent events, updates, or information that surfaced after training, and they may give inaccurate responses when asked about recent topics.

4. Hallucination (Confident, Yet Incorrect Responses)

One significant issue with LLMs is "hallucination": the model generates information that sounds highly confident but is entirely wrong.

5. High Hardware and Computational Demands

Training and fine-tuning LLMs require high computational power and hardware support, leading to high costs.

6. Ethical Challenges (False or Infringing Content)

LLMs can be used to create fake news, deepfakes, or other deceptive materials.

Agentic LLMs vs Traditional LLMs

| Feature | Traditional LLMs | Agentic LLMs |
|---|---|---|
| Working | Depend on user input and generate output from training data, without access to the real-time context. | Work step by step toward a goal and analyze the situation as they go, which keeps their output grounded in the actual context. |
| Decision making | No decision-making ability. | Independent decision-making ability. |
| Reasoning capability | Limited to simple tasks and to a single prompt. | Can break a problem into smaller pieces, suggest different possibilities, and learn on their own how to tackle tasks. |
| Memory | Short memory; details are forgotten over long interactions. | Can remember past conversations, context, and preferences. |
| Interaction | Limited to simple conversation. | Can connect to external systems to gather information and perform tasks. |
| Customization | Only possible through prompt engineering. | Users can build agents with specific goals and customize them accordingly. |
| Multi-agent coordination | Focus on one agent at a time. | Multiple agents work together to achieve a goal. |
| Efficiency | Use a lot of resources to generate accurate output and need detailed prompt engineering. | The ability to plan, remember, and act can make better use of resources. |
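The Memory and Interaction rows of the comparison above can be sketched as follows. This is a rough illustration under stated assumptions: `traditional_llm`, `AgenticAssistant`, and `weather_tool` are hypothetical names, and the keyword match stands in for the LLM-driven tool selection a real agent would use.

```python
from typing import Callable, Dict, List


def traditional_llm(prompt: str) -> str:
    # Hypothetical stateless model stub: each call sees only its own prompt.
    return f"answer based only on: {prompt!r}"


class AgenticAssistant:
    """Keeps a memory of past requests and can call external tools."""

    def __init__(self, tools: Dict[str, Callable[[str], str]]):
        self.tools = tools
        self.memory: List[str] = []

    def handle(self, request: str) -> str:
        self.memory.append(request)  # remember the conversation so far
        for keyword, tool in self.tools.items():
            if keyword in request.lower():
                return tool(request)  # act through an external system
        return f"no tool needed; {len(self.memory)} request(s) remembered"


def weather_tool(request: str) -> str:
    # Hypothetical tool; a real one would call an external weather service.
    return "weather tool called"


assistant = AgenticAssistant({"weather": weather_tool})
first = assistant.handle("What is the weather today?")
second = assistant.handle("Thanks!")
print(first)
print(second)
```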

Generative AI and Traditional LLMs

| Feature | Generative AI | Traditional LLMs |
|---|---|---|
| Type of Content | Capable of creating text, music, images, code, and other content types. | Specializes in text-based tasks like text generation and language translation. |
| Scope | Broader scope, covering multiple data types and outputs. | Narrower scope, focusing only on text. |
| Data Requirements | Requires diverse datasets for training. | Requires large text datasets for training. |
| Complexity | More complex due to the need for diverse outputs. | Less complex, limited to text processing. |
| Use Cases | Healthcare, education, training, customer support. | Code generation, R&D, e-commerce personalization. |
| Technical Expertise | Needs domain-specific knowledge and ML expertise. | Requires less expertise, as most LLMs are pre-trained. |

Choosing the Right AI Technology for Your Needs

Given how quickly AI technologies are developing, it is clear that both Generative AI and Agentic AI have the potential to revolutionize a variety of industries. While Generative AI has changed the dynamics of content creation by enabling companies to create unique and customized materials, Agentic AI goes one step further by empowering systems to perform tasks and make decisions on their own, bringing in the era of Agentic Automation.

AI is here to stay, and the choice of which technology to use will depend on the particular requirements, objectives, and vision of your company. The options are wide open, whether your goal is to improve content creation, encourage more intelligent decision-making, or automate difficult tasks. Collaborating with a trusted UiPath implementation partner can help you make a seamless transition with a strategic roadmap.
