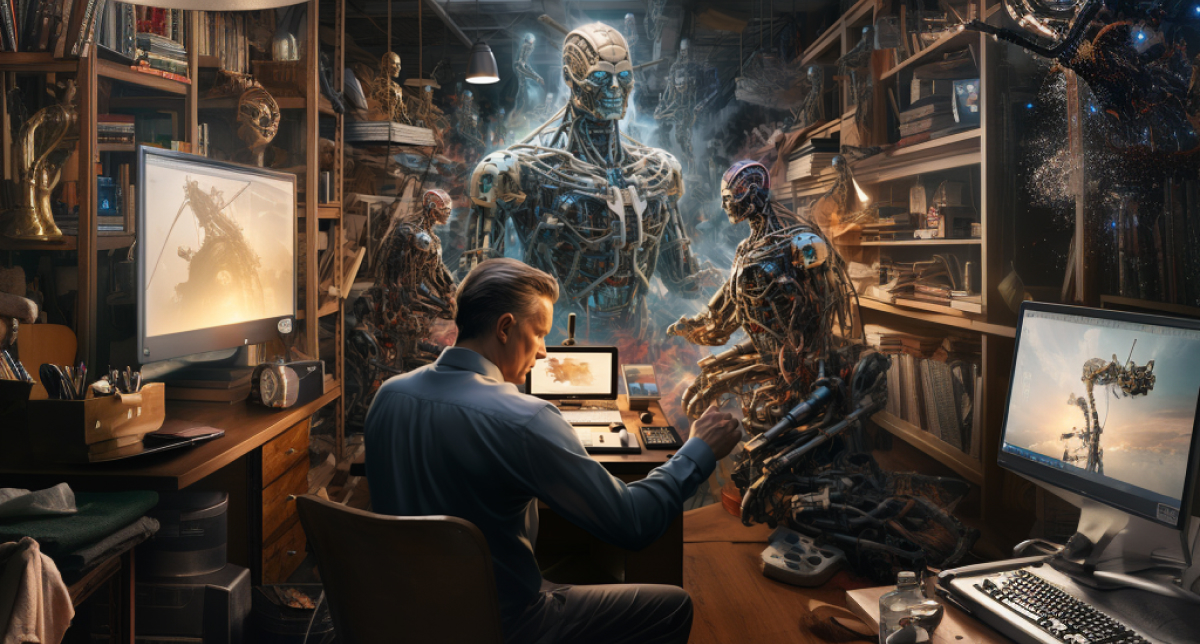

Has AI Become Too Human? Time for a Reality Check on Large Language Models

Large Language Models (LLMs), such as OpenAI’s GPT, have gained unprecedented attention for their ability to generate human-like text, automate business processes, understand context, and perform various language-related tasks.

However, as these models approach new levels of sophistication, concerns have arisen regarding the blurred boundaries between AI and human capabilities. The question looms: Has AI become too human, and is it time for a reality check on LLMs?

“AI is more dangerous than, say, mismanaged aircraft design or production maintenance or bad car production, in the sense that it is, it has the potential — however small one may regard that probability, but it is non-trivial — it has the potential of civilization destruction,” Musk said in his interview with Tucker Carlson.

The Rise Of Human-Like AI

Large Language Models have come a long way since their inception. They can generate coherent, context-aware text, answer questions, write code, translate languages, and even compose artistic work such as poetry. Their output often exhibits nuances that closely mimic human expression, making it challenging to distinguish AI-generated content from human-generated content. This uncanny resemblance has spurred discussions about AI’s ethical and practical implications.

Biased output is another concern. Trained on diverse datasets, LLMs inadvertently absorb societal biases present in the data, leading to content that reinforces stereotypes and prejudices.

This can have real-world impacts, influencing decision-making and perpetuating misinformation. Addressing this challenge requires a multi-faceted approach, including diverse training data, bias detection tools, fairness constraints, and user education. By acknowledging and actively countering biased outputs, we can harness the potential of LLMs while striving for equitable and responsible AI integration.

The Ethical Dilemmas

AI-generated content closely resembles human content, and that fact alone raises many ethical concerns. One of the major problems is the spread of misinformation. These models can fabricate convincing narratives, leading to confusion and manipulation of the masses and posing a serious threat to public trust.

Furthermore, generating content that is virtually indistinguishable from human authorship could lead to intellectual property and copyright issues. Who owns the rights to AI-generated content, and how should it be attributed? As AI becomes more sophisticated, these questions become even more pressing.

Impact On Human Labor And Creativity

AI advancements also raise concerns about the future of human labor. The displacement of content creators has already begun, and AI now threatens to replace editors and writers as well. Automation offers efficiency, but at the cost of lost income for many people.

Moreover, the rise of AI-generated content raises the question of what creativity means. Can AI truly be creative, or is it merely mimicking patterns learned from existing human-created content? This blurring of lines challenges our understanding of creativity and the unique qualities that make human expression valuable.

The Reality Check

Amidst the excitement surrounding AI’s human-like capabilities, it’s essential to maintain a realistic perspective. Despite the impressive feats LLMs can accomplish, they are still tools developed by humans and trained on human-generated data. They lack genuine consciousness, emotions, and intentionality. The outputs they produce are a result of pattern recognition and statistical analysis rather than genuine understanding.

Recognizing these limitations is crucial to harnessing the potential of AI responsibly. Transparency in disclosing AI-generated content, educating the public about the capabilities and limitations of AI, and implementing safeguards to prevent misuse are vital steps toward a more balanced integration of AI into society.

Conclusion

The advancement of Large Language Models has undoubtedly pushed the boundaries of what AI can achieve in language processing. While AI-generated content might resemble human expression, it’s important to remember that these models lack true consciousness and understanding. Ethical considerations, potential job displacement, and the redefinition of creativity all warrant careful examination as we navigate the integration of AI into our lives.

A reality check on LLMs reminds us to approach AI’s capabilities with both enthusiasm and caution, ensuring that we leverage this technology to improve society while upholding our human values.